Deploy Your Own SolrCloud to Kubernetes for Fun and Profit

One of Sitecore’s big dependencies is Apache Solr (not SOLR or Solar), which it uses for search. Solr is a robust, battle-tested search platform, but it can be a little hairy and, like a lot of open source software, it’ll run on Windows but really feels more at home on Linux.

So if you’re running Sitecore and you’re hosted in the cloud, you’ve got a couple of options for hosting Solr:

- Deploy a bunch of VMs

- Use a managed service (SearchStax, et al.)

- Run it on a cloud service (e.g. Kubernetes)

Obviously, since it’s me, we’re going hard mode and running this thing on Kubernetes.

Surprisingly, there’s not a whole ton of documentation out there on how to set up a basic multinode SolrCloud in Kubernetes. Most of the stuff out there requires some crazy set of CRDs or custom images or something else that seems…excessive. I just want to run the official containers that Apache publishes for Solr and Zookeeper!

First, we’ll need to understand how Solr and Zookeeper do “load balancing” because it’s a little atypical. Normally, you would have a bunch of instances plus a separate load balancer that receives traffic and distributes it across them. With SolrCloud, any node can receive a query (which is good), and the nodes then talk to each other to distribute the work, since your collections are sharded across your instances. Zookeeper keeps track of the cluster state so every node knows where the shards live.

The Design

The intention behind this was to be as simple and stripped down as possible - still be able to scale to multiple instances of both Zookeeper and Solr, use the default Docker Hub images, and just spin up a basic Solr instance to support Sitecore.

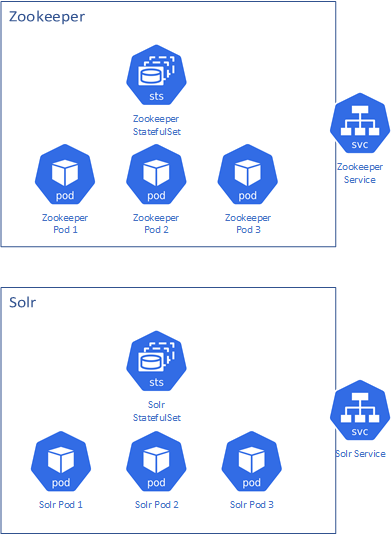

It’ll look something like this:

Okay, so we really just need one StatefulSet for Zookeeper and one StatefulSet for Solr. The StatefulSet is similar to the Kubernetes Deployment but it’s intended for, well, stateful applications. That is, you have some storage mechanism backing your pod so that data can be persisted across restarts. Each instance of the pod gets its own storage and manages it accordingly.

Since we’ll have multiple replicas in our StatefulSet, we’ll also need a Service as the network entrypoint.
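To make the naming concrete: pods in a StatefulSet are named `<statefulset-name>-<ordinal>`, and when the governing Service is headless, each pod is also resolvable inside the namespace as `<pod-name>.<service-name>`. Here's a rough sketch that prints those names. The helper name `pod_dns_names` is made up for illustration, and the StatefulSet and Service names are the ones used in the Zookeeper config later in this post.

```shell
#!/bin/sh
# Hypothetical helper (not part of Kubernetes or my repo): prints the stable
# in-namespace DNS name of each pod in a StatefulSet behind a headless Service.
# Usage: pod_dns_names <statefulset-name> <service-name> <replicas>
pod_dns_names() {
  i=0
  while [ "$i" -lt "$3" ]; do
    # StatefulSet pods are numbered from 0: <statefulset-name>-<ordinal>
    echo "$1-$i.$2"
    i=$((i + 1))
  done
}

pod_dns_names zookeeper-statefulset zookeeper-service 3
```

These are the hostnames that show up in the ZOO_SERVERS and ZK_HOST values in the configs.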

The Quirks

This is all well and good, but due to the nature of Kubernetes and containerized applications, there are a couple of things we need to account for:

Zookeeper instance IDs

Each Zookeeper instance in a cluster needs its own ID, from 1-254. In a typical non-containerized scenario, the ID lives in a file called myid in the instance’s data directory, and you hardcode a different number on each instance. If you wanted to add another instance, you’d have to set the myid file for the new instance to the next number up (or another number you weren’t using).

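The Docker container for Zookeeper tries to mitigate this by supporting an environment variable called ZOO_MY_ID that sets the ID at runtime. That means each replica needs a different value depending on which instance is starting up. Kubernetes provides something called the Downward API, which can pull pod information such as the pod name into environment variables, but it only passes values through as-is; it can’t do the arithmetic needed to turn the pod’s zero-based ordinal into a valid ID. So the config below skips ZOO_MY_ID and instead uses an init container that derives the ID from the pod’s hostname and writes the myid file directly.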
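For reference, the init container in the Zookeeper config below derives the ID from the pod’s hostname with a bit of shell arithmetic. Here's a minimal sketch of that logic, assuming the hostname ends in the StatefulSet ordinal (as StatefulSet pod names do). The function name `zk_id_from_hostname` is mine, not something the Zookeeper image provides.

```shell
#!/bin/sh
# Hypothetical helper: maps a StatefulSet pod hostname to a Zookeeper ID.
# Pod ordinals start at 0, but Zookeeper IDs start at 1, hence the +1.
zk_id_from_hostname() {
  ordinal=${1##*-}   # strip everything up to and including the last "-"
  echo $((ordinal + 1))
}

zk_id_from_hostname zookeeper-statefulset-0   # prints 1
zk_id_from_hostname zookeeper-statefulset-2   # prints 3
```

In the init container the same expression runs against $HOSTNAME and the result is written to the myid file on the pod’s volume.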

The Code

Here are the Kubernetes configs to set up your own SolrCloud instance on Kubernetes! Check out the comments to understand what the pieces do, and feel free to take them and modify them to fit your needs.

You can find the configs below, and more, in my GitHub repo for a sample Sitecore XP0 deployment.

Zookeeper

https://github.com/georgechang/sitecore-k8s/blob/main/zookeeper/zookeeper-statefulset.yaml

# this allows for max 1 instance of ZK to be unavailable - should be updated based on the number of instances being deployed to maintain a quorum
# policy/v1 replaces policy/v1beta1, which was removed in Kubernetes 1.25 (use policy/v1beta1 on clusters older than 1.21)
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: zookeeper-pdb
  namespace: solr
spec:
  selector:
    matchLabels:
      app: zookeeper
  maxUnavailable: 1
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: zookeeper-statefulset
  namespace: solr
  labels:
    app: zookeeper
spec:
  replicas: 3 # set this to the number of Zookeeper pod instances you want
  updateStrategy:
    type: RollingUpdate
  podManagementPolicy: Parallel
  selector:
    matchLabels:
      app: zookeeper
  serviceName: zookeeper-service
  template:
    metadata:
      labels:
        app: zookeeper
    spec:
      nodeSelector:
        kubernetes.io/os: linux
        agentpool: solr
      affinity:
        # this causes K8s to schedule only one Zookeeper pod per node
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: "app"
                    operator: In
                    values:
                      - zookeeper
              topologyKey: "kubernetes.io/hostname"
      containers:
        - name: zookeeper
          image: zookeeper:3.4
          env:
            - name: ZK_REPLICAS
              value: "3" # informs Zookeeper of the number of intended replicas
            - name: ZK_TICK_TIME
              value: "2000"
            - name: ZOO_4LW_COMMANDS_WHITELIST
              value: "mntr,conf,ruok"
            - name: ZOO_STANDALONE_ENABLED
              value: "false"
            # lists all of the Zookeeper servers that are part of this cluster
            # NOTE: the ";2181" client-port suffix is ZooKeeper 3.5+ syntax, so the image tag above may need to be 3.5 or later
            - name: ZOO_SERVERS
              value: "server.1=zookeeper-statefulset-0.zookeeper-service:2888:3888;2181 server.2=zookeeper-statefulset-1.zookeeper-service:2888:3888;2181 server.3=zookeeper-statefulset-2.zookeeper-service:2888:3888;2181"
            # quorumListenOnAllIPs allows ZK to listen on all IP addresses for leader election/follower, electionPortBindRetry disables the max retry count as other ZK instances are spinning up
            - name: ZOO_CFG_EXTRA
              value: "quorumListenOnAllIPs=true electionPortBindRetry=0"
          ports:
            - name: client
              containerPort: 2181
              protocol: TCP
            - name: server
              containerPort: 2888
              protocol: TCP
            - name: election
              containerPort: 3888
              protocol: TCP
          volumeMounts:
            - name: zookeeper-pv
              mountPath: /data
          livenessProbe:
            # runs a shell script to ping the running local Zookeeper instance, which responds with "imok" once the instance is ready
            exec:
              command:
                - sh
                - -c
                - 'OK=$(echo ruok | nc 127.0.0.1 2181); if [ "$OK" = "imok" ]; then exit 0; else exit 1; fi;'
            initialDelaySeconds: 20
            timeoutSeconds: 5
          readinessProbe:
            # runs a shell script to ping the running local Zookeeper instance, which responds with "imok" once the instance is ready
            exec:
              command:
                - sh
                - -c
                - 'OK=$(echo ruok | nc 127.0.0.1 2181); if [ "$OK" = "imok" ]; then exit 0; else exit 1; fi;'
            initialDelaySeconds: 20
            timeoutSeconds: 5
      initContainers:
        # each ZK instance requires an ID specification - since we can't set the ID using env variables, this init container sets the ID for each instance incrementally through a file on a volume mount
        - name: zookeeper-id
          image: busybox:latest
          command:
            - sh
            - -c
            # takes the ordinal from the end of the pod hostname and adds 1 (ZK IDs start at 1)
            - 'echo $((${HOSTNAME##*-}+1)) > /data-new/myid'
          volumeMounts:
            # same volume the Zookeeper container mounts at /data
            - name: zookeeper-pv
              mountPath: /data-new
  volumeClaimTemplates:
    - metadata:
        name: zookeeper-pv
      spec:
        accessModes:
          - ReadWriteOnce
        storageClassName: managed-premium
        resources:
          requests:
            storage: 1Gi
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper-service
  namespace: solr
  labels:
    app: zookeeper
spec:
  ports:
    - port: 2888
      name: server
    - port: 3888
      name: leader-election
    - port: 2181
      name: client
  clusterIP: None # headless Service, so each pod gets its own DNS name
  selector:
    app: zookeeper
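One thing to watch: ZOO_SERVERS (and ZK_REPLICAS) have to be kept in step with replicas by hand. As a rough sketch, a small shell helper could generate the ZOO_SERVERS value for a given replica count. `zoo_servers` is a hypothetical name, and the hostnames and ports simply mirror the StatefulSet above.

```shell
#!/bin/sh
# Hypothetical helper: builds the space-separated ZOO_SERVERS value for N
# replicas, using the StatefulSet/Service names and ports from the config above.
# Usage: zoo_servers <replicas>
zoo_servers() {
  servers=""
  i=0
  while [ "$i" -lt "$1" ]; do
    # server.<id> uses the same ordinal+1 numbering as the myid file
    entry="server.$((i + 1))=zookeeper-statefulset-$i.zookeeper-service:2888:3888;2181"
    # append with a single space separator (no leading space on the first entry)
    servers="${servers:+$servers }$entry"
    i=$((i + 1))
  done
  echo "$servers"
}

zoo_servers 3
```

Paste the output into the ZOO_SERVERS value whenever you change the replica count.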

Solr

https://github.com/georgechang/sitecore-k8s/blob/main/solr/solr-statefulset.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: solr-statefulset
  namespace: solr
  labels:
    app: solr
spec:
  replicas: 3 # set this to the number of Solr pod instances you want
  selector:
    matchLabels:
      app: solr
  serviceName: solr-service
  template:
    metadata:
      labels:
        app: solr
    spec:
      securityContext:
        runAsUser: 1001
        fsGroup: 1001
      nodeSelector:
        kubernetes.io/os: linux
        agentpool: solr
      affinity:
        # this causes K8s to schedule only one Solr pod per node
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: "app"
                    operator: In
                    values:
                      - solr
              topologyKey: "kubernetes.io/hostname"
      containers:
        - name: solr
          image: solr:8.4
          env:
            # ZK_HOST lists all of the hostnames for all of the Zookeeper instances - this should correspond to however many ZK instances you have running, so add one entry per additional instance
            - name: ZK_HOST
              value: zookeeper-statefulset-0.zookeeper-service:2181,zookeeper-statefulset-1.zookeeper-service:2181,zookeeper-statefulset-2.zookeeper-service:2181
            - name: SOLR_JAVA_MEM
              value: "-Xms4g -Xmx4g" # set the JVM memory usage and limit
          ports:
            - name: solr
              containerPort: 8983
          volumeMounts:
            - name: solr-pvc
              mountPath: /var/solr
          livenessProbe:
            # runs a built-in script to check for Solr readiness/liveness
            exec:
              command:
                - /bin/bash
                - -c
                - "/opt/docker-solr/scripts/wait-for-solr.sh"
            initialDelaySeconds: 20
            timeoutSeconds: 5
          readinessProbe:
            # runs a built-in script to check for Solr readiness/liveness
            exec:
              command:
                - /bin/bash
                - -c
                - "/opt/docker-solr/scripts/wait-for-solr.sh"
            initialDelaySeconds: 20
            timeoutSeconds: 5
      initContainers:
        # runs a built-in script to wait until all Zookeeper instances are up and running
        - name: solr-zk-waiter
          image: solr:8.4
          command:
            - /bin/bash
            - "-c"
            - "/opt/docker-solr/scripts/wait-for-zookeeper.sh"
          env:
            - name: ZK_HOST
              value: zookeeper-statefulset-0.zookeeper-service:2181,zookeeper-statefulset-1.zookeeper-service:2181,zookeeper-statefulset-2.zookeeper-service:2181
        # runs a built-in script to initialize Solr instance if necessary
        - name: solr-init
          image: solr:8.4
          command:
            - /bin/bash
            - "-c"
            - "/opt/docker-solr/scripts/init-var-solr"
          volumeMounts:
            - name: solr-pvc # must match the volumeClaimTemplates name below
              mountPath: /var/solr
  volumeClaimTemplates:
    - metadata:
        name: solr-pvc
      spec:
        accessModes:
          - ReadWriteOnce
        storageClassName: managed-premium
        resources:
          requests:
            storage: 1Gi
---
apiVersion: v1
kind: Service
metadata:
  name: solr-service
  namespace: solr
  labels:
    app: solr
spec:
  type: LoadBalancer
  ports:
    - port: 8983
  selector:
    app: solr
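With both manifests saved locally, applying them might look something like the sketch below. This is just one way to do it: the `deploy_solrcloud` wrapper and the `KUBECTL` override are my own invention for illustration, the file names are assumed to match the ones in the repo links above, and it assumes the solr namespace doesn't exist yet.

```shell
#!/bin/sh
# Hypothetical wrapper around kubectl. Set KUBECTL=echo for a dry run that
# only prints the kubectl arguments instead of running anything.
# File names are assumed to match the manifests linked above.
deploy_solrcloud() {
  k=${KUBECTL:-kubectl}
  # the manifests reference the "solr" namespace, so it has to exist first
  $k create namespace solr
  $k apply -f zookeeper-statefulset.yaml
  # wait for the Zookeeper pods to be ready before starting Solr
  $k rollout status statefulset/zookeeper-statefulset -n solr
  $k apply -f solr-statefulset.yaml
  $k rollout status statefulset/solr-statefulset -n solr
}

# dry run: prints the five kubectl invocations without touching a cluster
KUBECTL=echo deploy_solrcloud
```

Waiting on the Zookeeper rollout is optional, since the Solr pods already have an init container that waits for Zookeeper, but it makes failures easier to spot.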